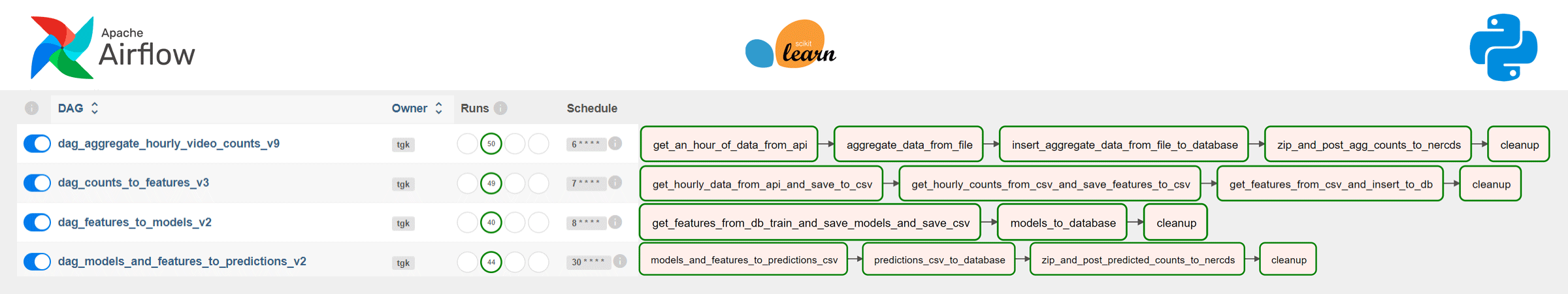

Vehicle and pedestrian count prediction is orchestrated with Apache Airflow and implemented in Python, giving a robust, automated system. The work is split into four data pipelines (Airflow DAGs), which in turn prepare the data, build the training datapoints, train the forecasting models, and produce and publish the resulting predictions.

The first pipeline aggregates high-resolution but inherently volatile data into more stable and interpretable hourly sums. This initial transformation makes the data better suited to further analysis and to training machine learning models.
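The aggregation step can be illustrated with a minimal pandas sketch. The column names (`timestamp`, `location`, `direction`, `vehicle_class`, `count`) and the function `aggregate_hourly` are assumptions made for illustration; the actual schema and implementation are not described here.

```python
import pandas as pd

# Hypothetical key columns identifying one count series.
SERIES_KEYS = ["location", "direction", "vehicle_class"]


def aggregate_hourly(raw: pd.DataFrame) -> pd.DataFrame:
    """Sum high-resolution counts into hourly totals for each series."""
    return (
        raw.assign(hour=raw["timestamp"].dt.floor("h"))  # truncate to the hour
        .groupby(SERIES_KEYS + ["hour"], as_index=False)["count"]
        .sum()
    )


# Tiny made-up sample: two readings in hour 00, one in hour 01.
raw = pd.DataFrame(
    {
        "timestamp": pd.to_datetime(
            ["2023-01-01 00:05", "2023-01-01 00:35", "2023-01-01 01:10"]
        ),
        "location": ["A", "A", "A"],
        "direction": ["north", "north", "north"],
        "vehicle_class": ["car", "car", "car"],
        "count": [3, 4, 5],
    }
)
hourly = aggregate_hourly(raw)
```

With this sample, the two readings in the first hour collapse into one row and the third reading forms its own row.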

The second pipeline turns the aggregated data into datapoints ready for training machine learning models. Each datapoint contains the sequence of the previous 24 values, an engineered representation of time that converts the hour of the day (on a 24-hour clock) into four temporal distances, the day of the week as a one-hot encoded feature, and the target value, which is the most recent count reading. The datapoints are stored in a feature store database so that later phases can retrieve them easily.
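A rough sketch of how such a datapoint might be assembled is given below. The exact definition of the four temporal distances is not stated in the text; this sketch assumes they are circular distances, in hours, from the current hour to four anchor hours (00, 06, 12, 18). The names `ANCHOR_HOURS`, `temporal_distances` and `build_datapoints` are hypothetical.

```python
import numpy as np
import pandas as pd

# ASSUMPTION: the four anchors are not given in the source text.
ANCHOR_HOURS = (0, 6, 12, 18)


def temporal_distances(hour: int) -> list:
    """Circular distance (in hours) from `hour` to each anchor hour."""
    return [min((hour - a) % 24, (a - hour) % 24) for a in ANCHOR_HOURS]


def build_datapoints(hourly: pd.Series, window: int = 24) -> pd.DataFrame:
    """Build one row per hour: lagged values, time features, one-hot weekday, target."""
    values = hourly.to_numpy()
    rows = []
    for i in range(window, len(hourly)):
        ts = hourly.index[i]
        one_hot = [int(ts.dayofweek == d) for d in range(7)]  # Monday is 0
        rows.append(
            [*values[i - window:i], *temporal_distances(ts.hour), *one_hot, values[i]]
        )
    columns = (
        [f"lag_{k}" for k in range(window, 0, -1)]
        + [f"dist_{a:02d}h" for a in ANCHOR_HOURS]
        + [f"dow_{d}" for d in range(7)]
        + ["target"]
    )
    return pd.DataFrame(rows, columns=columns)


# Synthetic hourly series starting on a Monday, for illustration only.
index = pd.date_range("2023-01-02", periods=30, freq="h")
series = pd.Series(np.arange(30.0), index=index)
datapoints = build_datapoints(series)
```

Each row has 24 lag columns, 4 distance columns, 7 weekday columns and the target, so 30 hourly values yield 6 datapoints of 36 columns.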

The third pipeline reads datapoints from the feature store separately for each unique combination of location, direction, and vehicle class. These datapoints are used to train and evaluate baseline regression models from the scikit-learn package: Random Forest, Gradient Boosting, and Support Vector Regression. Training uses a data folding technique in which training and evaluation are performed multiple times on a randomly shuffled dataset, and the models are compared using the Mean Absolute Percentage Error (MAPE) to identify the best performer. Finally, the best model is stored as a binary object in a database, ready to be used for predictions.
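The model comparison could look roughly like the following sketch. It assumes the folding technique corresponds to shuffled k-fold cross-validation (`KFold(shuffle=True)`); the number of folds, the hyperparameters, the synthetic data and the function name `select_best_model` are all illustrative assumptions, not the project's actual settings.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import KFold, cross_val_score
from sklearn.svm import SVR


def select_best_model(X, y, n_splits=5, seed=0):
    """Cross-validate three baseline regressors and return the one with the lowest MAPE."""
    candidates = {
        "random_forest": RandomForestRegressor(n_estimators=50, random_state=seed),
        "gradient_boosting": GradientBoostingRegressor(random_state=seed),
        "svr": SVR(),
    }
    # ASSUMPTION: shuffled k-fold stands in for the "data folding" described above.
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    scores = {}
    for name, model in candidates.items():
        neg_mape = cross_val_score(
            model, X, y, cv=folds, scoring="neg_mean_absolute_percentage_error"
        )
        scores[name] = -neg_mape.mean()  # scikit-learn reports the negated error
    best_name = min(scores, key=scores.get)
    best_model = candidates[best_name].fit(X, y)  # refit the winner on all data
    return best_name, best_model, scores


# Synthetic data for illustration only; targets are kept well away from zero
# because MAPE is unstable for near-zero counts.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(120, 3))
y = 50 + X @ np.array([3.0, 2.0, 1.0]) + rng.normal(0, 1, size=120)
best_name, best_model, scores = select_best_model(X, y)
```

In the pipeline described above, this selection would be run once per combination of location, direction, and vehicle class.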

The fourth and final pipeline loads the latest models and uses them to make predictions on new data. The predictions are stored in a database for future reference and uploaded to the NERC-DS portal, so that the latest count predictions are readily accessible to users.
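Storing a model as a binary object and reloading it for prediction can be sketched as follows. The database engine and schema are not specified in the text, so this example assumes an in-memory SQLite database and `pickle` serialisation; the table name `models`, the key format and the helper functions are hypothetical. The upload to the NERC-DS portal is omitted because its interface is not described here.

```python
import pickle
import sqlite3

import numpy as np
from sklearn.ensemble import RandomForestRegressor


def save_model(conn, series_key, model):
    """Store a fitted model as a binary blob under its series key."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS models (series_key TEXT PRIMARY KEY, payload BLOB)"
    )
    conn.execute(
        "INSERT OR REPLACE INTO models VALUES (?, ?)",
        (series_key, pickle.dumps(model)),
    )
    conn.commit()


def load_model(conn, series_key):
    """Load the latest stored model for a series, or raise KeyError if absent."""
    row = conn.execute(
        "SELECT payload FROM models WHERE series_key = ?", (series_key,)
    ).fetchone()
    if row is None:
        raise KeyError(series_key)
    return pickle.loads(row[0])


# Illustrative round trip with synthetic data.
rng = np.random.default_rng(0)
X_train = rng.uniform(0, 10, size=(50, 3))
y_train = X_train.sum(axis=1)
model = RandomForestRegressor(n_estimators=20, random_state=0).fit(X_train, y_train)

conn = sqlite3.connect(":memory:")
save_model(conn, "A/north/car", model)

restored = load_model(conn, "A/north/car")
X_new = rng.uniform(0, 10, size=(4, 3))
predictions = restored.predict(X_new)
```

Unpickling executes arbitrary code, so a setup like this should only ever load blobs from a trusted database.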